How to Use Email Split Testing to Maximize Conversions: A Step-by-Step Guide

Imagine this: You’ve spent hours crafting the perfect email. The subject line? Chef’s kiss. The content? Pure gold. You hit send, sit back, and wait for the magic to happen. But instead of a flood of opens and clicks, you get… crickets.

Sound familiar?

Here’s the truth: even the most seasoned email marketers don’t have a crystal ball. But what they do have is a secret weapon that takes the guesswork out of email marketing and replaces it with cold, hard data. It’s called Split Testing (or A/B testing).

Think of it as your email marketing lab—where you get to experiment, test, and discover exactly what makes your audience tick. The best part? You don’t need to be a data scientist or a marketing guru to pull it off.

In this blog, we’re breaking down everything you need to know about split testing—no jargon, no fluff, just actionable tips to help you send emails that actually convert. Ready to stop guessing and start winning? Let’s dive in.

What is Split Testing in Email?

Split testing in email marketing is a method of comparing two or more versions of an email to see which one performs better.

It’s simple: you send two (or more) versions of an email to your audience, see which one performs better, and then roll with the winner. Think of it as a friendly competition between your subject lines, CTAs, layouts, or whatever you’re testing.

In one line, here’s how to split test: choose one variable, create two (or more) versions, send each version to a different, randomly chosen slice of your audience, and compare performance to find the winner. That’s it.
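If your email tool doesn't handle the split for you, the random assignment step is easy to sketch. Here's a minimal Python example, assuming your list is simply a list of email addresses. The `split_audience` helper and the example addresses are made up for illustration and aren't part of any particular tool.

```python
import random

def split_audience(subscribers, n_variants=2, seed=42):
    """Randomly assign subscribers to n_variants groups of near-equal size."""
    shuffled = list(subscribers)            # copy so the caller's list is untouched
    random.Random(seed).shuffle(shuffled)   # seeded shuffle keeps the split reproducible
    # Deal the shuffled subscribers round-robin into the variant groups.
    return [shuffled[i::n_variants] for i in range(n_variants)]

# Hypothetical list of ten subscribers
subscribers = [f"user{i}@example.com" for i in range(10)]
group_a, group_b = split_audience(subscribers)
print(len(group_a), len(group_b))  # 5 5
```

Shuffling before splitting matters: if you just cut the list in half, one group might end up with all your oldest subscribers, and you'd be comparing audiences instead of emails.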

Pro-Tip: If you are looking for a simple guide to the basics of split testing, feel free to check out this blog!

Is There any Difference Between the A/B Test and the Split Test?

Not much. “A/B test” and “split test” are often used interchangeably, and in most cases they refer to the same concept: testing two versions of something (like an email, webpage, or ad) to see which one performs better.

But here’s a small difference:

- A/B Test usually means testing two versions (A vs B)

- Split Test can involve more than two versions (A vs B vs C, D, E, etc.)

In day-to-day marketing or SaaS conversations, though, both terms are used synonymously.

So you’re totally fine using either, especially when you’re comparing just two versions, such as two email sequences.

Now, let’s talk about how to do it right.

How to Use Split Testing to Boost Email Conversions

Alright, let’s get down to business. You know why split testing matters, and you’ve got the basics of what it is. Now it’s time to roll up your sleeves and learn how to use it to supercharge your email conversions.

In this section, we’re diving into the how-to of split testing like a pro. From crafting killer hypotheses to testing the right elements (and avoiding rookie mistakes), we’ve got you covered. By the end, you’ll have a clear roadmap to turn your emails into conversion machines.

1. Start with a Hypothesis, Not a Hunch

Don’t test just for the sake of testing. Always start with a clear reason behind your test. Random experimentation wastes time and doesn’t give you meaningful insights. You need to know why you’re testing something in the first place.

- Bad example: “Let’s test something. Anything. Whatever.”

- Good example: “I think using first names in subject lines will increase opens. Let’s test it.”

So before you hit send, ask yourself: What do I want to learn?

Think like a scientist. A/B testing isn’t about randomly changing things just to see what happens. It’s about making educated guesses, testing them in a controlled way, and using real data to guide your decisions. Strategic testing leads to real improvements.

2. Segment Your Audience Well

Here’s a hard truth: not everyone on your email list is the same. Sure, they all signed up to hear from you, but that doesn’t mean they’ll all respond to the same email content. Segmentation statistics consistently point the same way.

That’s where segmentation comes in. Some subscribers might like subject lines with emojis, while others might not.

Segmentation lets you tailor your email campaigns to different groups, so you’re serving up exactly what they want.

For example:

- New subscribers might respond better to a welcome email with a special discount.

- Loyal customers might prefer exclusive sneak peeks or VIP offers.

- Inactive subscribers might need a re-engagement campaign with a bold subject line to grab their attention.

By segmenting your contact list before you test, you’re not just guessing—you’re strategically targeting the right people with the right message. This means clearer insights, better results, and a whole lot less wasted effort.

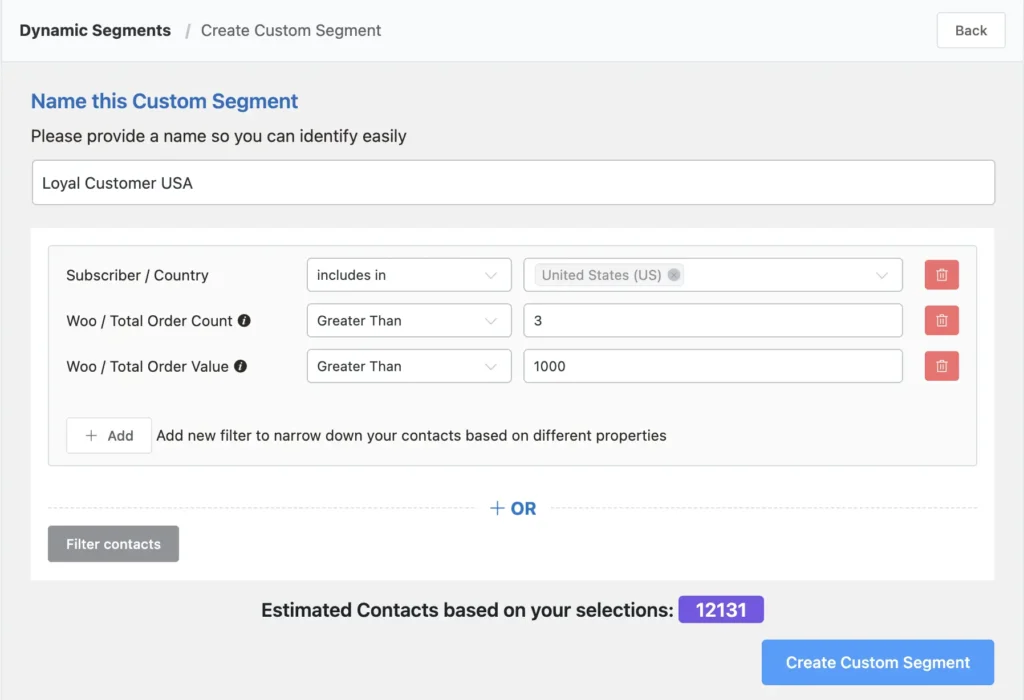

🔥 Pro tip: Start with basic segments like demographics, purchase history, or engagement levels. Then, as you get more comfortable, dive deeper into behavior-based segments (like cart abandoners or frequent buyers). The more specific you get, the more your email campaigns will shine. FluentCRM’s advanced segmentation can make this easier.

3. Test One Thing at a Time (Seriously)

You’ve probably heard this a hundred times—but trust me, it’s super important. If you test the subject line, the call-to-action, and the layout all at once, how will you ever know what made a difference?

You won’t. It’ll just be a guessing game. So, keep it simple. Test one thing at a time.

Pick one variable—like the subject line, the CTA button, the image, or the email header. That’s it. One at a time.

And here’s another tip: Make the change obvious.

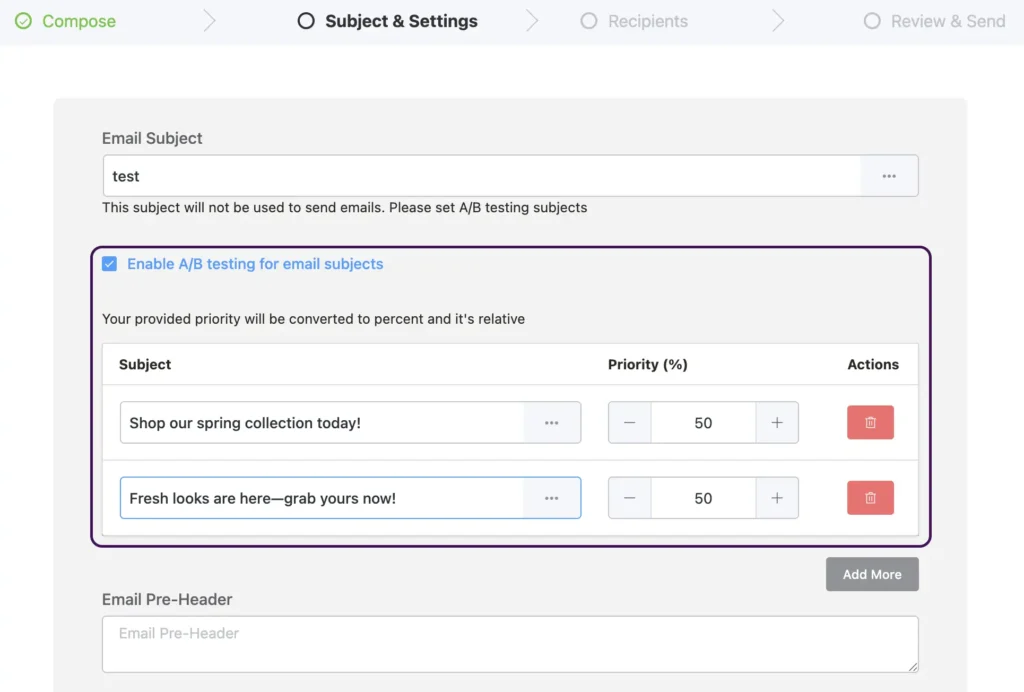

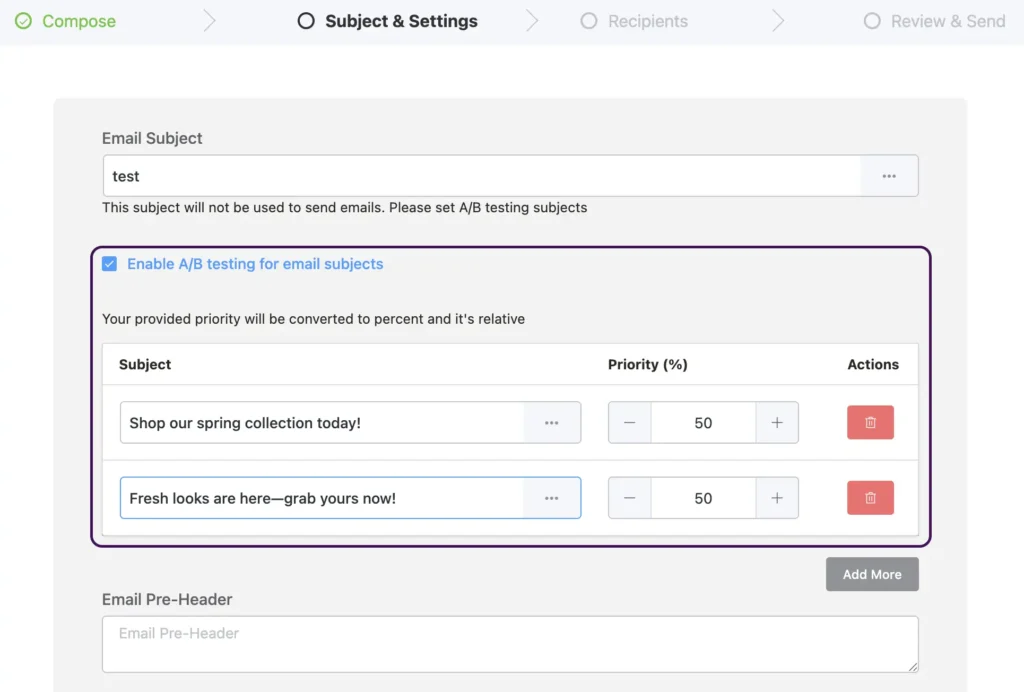

Tiny tweaks (like changing one word or swapping a comma) usually won’t show clear results. If you’re testing a headline, don’t just change “Buy Now” to “Shop Now.” Try a completely different tone or message.

For example:

- Version A: “Shop our spring collection today!”

- Version B: “Fresh looks are here—grab yours now!”

See the difference? It’s clear, bold, and easy to measure.

The goal is to get clear insights, not confusing data. So take it slow, test smart, and have fun seeing what actually clicks with your audience.

4. Size (and Timing) Matters

Let’s be real—split testing only works if you have enough people to test with. Testing two email versions on just 20 subscribers? That’s not going to tell you much. You need a decent sample size to get meaningful results.

Ideally, send each version to a few hundred subscribers at least. The bigger the group, the clearer your data will be.

Also, give your test enough time to breathe. Don’t check results after just an hour and call it a day. A good window is 24–48 hours. That way, you’re collecting data from people in different time zones and habits.

Timing also matters. Don’t run your test on an unusual day like a public holiday (unless that’s part of your usual email schedule). Pick a time of day when your subscribers are usually active, and send every version at that same time.

Always test on days when your audience normally engages—so you get real, reliable insights that actually help you improve.
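How many subscribers is "enough" depends on how small a difference you want to detect. As a rough sketch, here's the standard sample size approximation for comparing two rates, written in Python. The 5% significance level, 80% power, and the example rates are assumptions chosen for illustration, so plug in your own numbers.

```python
import math
from statistics import NormalDist

def sample_size_per_variant(baseline_rate, expected_rate, alpha=0.05, power=0.80):
    """Approximate subscribers needed per variant to detect the given change.

    Uses the normal approximation for comparing two proportions.
    alpha and power are conventional defaults, not rules.
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)   # two-sided significance
    z_beta = NormalDist().inv_cdf(power)            # desired statistical power
    variance = (baseline_rate * (1 - baseline_rate)
                + expected_rate * (1 - expected_rate))
    effect = expected_rate - baseline_rate
    return math.ceil((z_alpha + z_beta) ** 2 * variance / effect ** 2)

# Hypothetical goal: lift click rate from 3% to 4%
n = sample_size_per_variant(0.03, 0.04)
print(n)
```

With these example numbers the answer lands in the thousands per variant, not the hundreds. A few hundred per version is a floor for spotting big differences; small improvements need a much larger audience.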

5. Test the Right Elements (Not Just the Obvious Ones)

Sure, everyone talks about testing subject lines—but there’s so much more you can play with! Some of the smaller things can have a big impact, so don’t stop at the basics.

You can test things like:

- Sender Name: Does your audience respond better to your brand name or a real person’s name?

- Preheader Text: Should it tease the content or give a quick summary?

- CTA Button: “Buy Now” vs “Grab Your Deal” vs “Start Today”: which one gets more clicks? Test button color as well.

- CTA Placement: Try it at the top, middle, or end of your email.

- Email Length: Short and snappy or longer and detailed?

- Layout: One-column or two-column? Text-heavy or image-heavy?

- Images: Product shots, lifestyle photos, static images, or even a fun GIF? Experiment with visual elements.

- Email Template: Which one works better? Which color scheme goes better?

- Personalization: First-name intros or behavior-based content?

6. Segment Before You Send

Split testing is great on its own. But when you mix it with segmentation, that’s when things get really exciting. Why?

Because not everyone on your list thinks the same way. Different groups respond to different things.

Let’s say you find that Segment A loves subject lines with emojis—they open your emails right away. But Segment B? They prefer plain, no-fuss subject lines. If you only test your whole list as one big group, you might miss these little gems.

That’s why it’s smart to test within segments. Segment your list into smaller groups based on age, location, interests, or past behavior—and test each one separately. You’ll learn what makes each group tick and how to talk to them better.

It’s like fine-tuning your message for the right ears. Small change, big results. So go beyond the basics—start testing smart.
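To see why testing within segments matters, here's a small Python sketch with made-up numbers. The segment names, variant names, and counts are all hypothetical; the point is only to show how a whole-list comparison can hide what each segment prefers.

```python
# Hypothetical results: segment -> variant -> (emails sent, emails opened)
results = {
    "segment_a": {"emoji": (500, 140), "plain": (500, 110)},
    "segment_b": {"emoji": (500, 90), "plain": (500, 125)},
}

def winner_per_segment(results):
    """Return the variant with the highest open rate in each segment."""
    winners = {}
    for segment, variants in results.items():
        rates = {name: opens / sends for name, (sends, opens) in variants.items()}
        winners[segment] = max(rates, key=rates.get)
    return winners

def pooled_rates(results):
    """Open rate per variant when the whole list is treated as one group."""
    totals = {}
    for variants in results.values():
        for name, (sends, opens) in variants.items():
            sent_so_far, opened_so_far = totals.get(name, (0, 0))
            totals[name] = (sent_so_far + sends, opened_so_far + opens)
    return {name: opens / sends for name, (sends, opens) in totals.items()}

print(winner_per_segment(results))  # {'segment_a': 'emoji', 'segment_b': 'plain'}
print(pooled_rates(results))        # {'emoji': 0.23, 'plain': 0.235}
```

Pooled together, the two subject lines look nearly tied. Split by segment, each group has a clear favorite.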

7. Let the Data Talk (Even If It Hurts Your Feelings)

Sometimes, you might really like one version of your email. It looks great, it sounds catchy—you’re convinced it’s the best. But if your audience isn’t responding to it, tough love: it’s got to go. What you like doesn’t matter as much as what works. Always trust the data.

And don’t just look at one number. Maybe Version A got more opens—but what if Version B got fewer opens but way more clicks and sales? Then B is the real winner.

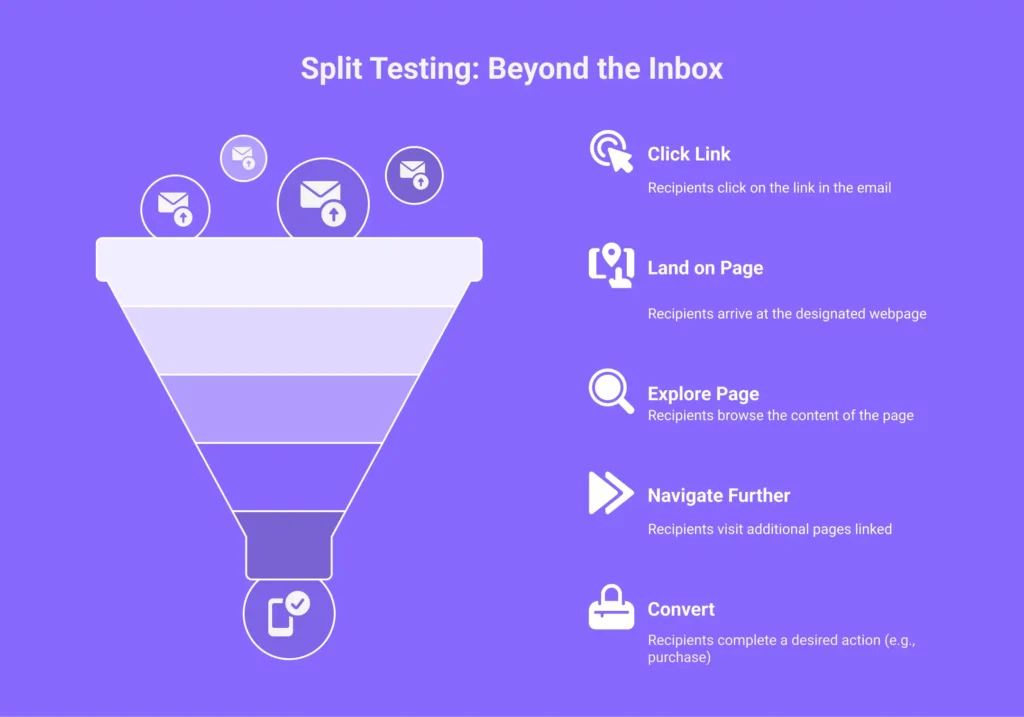

Remember, email success isn’t just about the first step (like open rate). You’ve got to look at the entire funnel: opens, clicks, conversions. That’s how you spot what’s really driving results.

So take a step back, look at the full picture, and let the numbers guide your decisions. Your opinion doesn’t pay the bills—results do.
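Here's a rough Python sketch of reading the full funnel for two versions and checking whether the gap in conversions is likely more than chance. The counts are invented for illustration, and the check is a standard two-proportion z-test, which is one reasonable choice and not the only one.

```python
import math
from statistics import NormalDist

def funnel(sent, opens, clicks, conversions):
    """Rates at each funnel step, all measured against emails sent."""
    return {
        "open_rate": opens / sent,
        "click_rate": clicks / sent,
        "conversion_rate": conversions / sent,
    }

def two_proportion_p_value(conv_a, n_a, conv_b, n_b):
    """Two-sided p-value from a pooled two-proportion z-test.

    Assumes at least one conversion overall (otherwise the
    standard error is zero and the test is undefined).
    """
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (conv_b / n_b - conv_a / n_a) / std_err
    return 2 * (1 - NormalDist().cdf(abs(z)))

# Hypothetical campaign: A wins on opens, B wins on clicks and sales
version_a = dict(sent=5000, opens=1500, clicks=200, conversions=50)
version_b = dict(sent=5000, opens=1300, clicks=260, conversions=85)

print(funnel(**version_a))
print(funnel(**version_b))

p_value = two_proportion_p_value(
    version_a["conversions"], version_a["sent"],
    version_b["conversions"], version_b["sent"],
)
print(p_value < 0.05)  # True
```

In this made-up case, Version A would win if you stopped at open rate, but Version B converts more, and the difference is unlikely to be noise.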

8. Test Pre and Post-Click Behavior

Testing isn’t just about what happens in the inbox. Sure, it’s fun to see how many people open your email, but what really matters is what happens after they click. Do they stick around? Do they buy something?

Here’s the deal: you need to test beyond the open rate. Look at what happens when people land on your page:

- Do they click?

- Do they convert?

- Do they spend more time on the page?

- Are they bouncing off the landing page?

- Do they go to another page linked to the email?

Tying your email results to actual outcomes is key. Vanity metrics, like open rates or click rates, are nice, but they don’t mean much if they don’t lead to conversions.

So, track everything—clicks, time on the page, bounce rates—and see how your email really impacts your goals.

9. Make Testing a Habit, Not a One-Time Thing

Split testing isn’t a “set it and forget it” strategy. The more you test, the smarter your email campaigns will get over time. It’s all about constant improvement. Keep iterating, keep optimizing.

To make testing a habit, you can:

- Create a testing calendar.

- Document what you learn.

- Share results with your team (or future self).

Also, document what you learn from each test. Keep a log of what worked, what didn’t, and why. This will help you spot trends over time and avoid repeating mistakes.

Finally, don’t keep all your insights to yourself. Share your results with your team (or with your future self). It keeps everyone on the same page and helps everyone make better decisions moving forward.

Testing should be an ongoing process—keep iterating, keep optimizing!

Common Split Testing Mistakes to Avoid

Split testing is like a superpower for improving your email campaigns, but if you’re not careful, it’s easy to get it wrong. Making a few common mistakes can lead to inaccurate results, which means missing out on valuable insights.

But don’t worry! If you know what to avoid, you can set yourself up for smarter, more effective testing.

Here are the most common split testing mistakes, so you can dodge them like a pro:

- Testing too many things at once

- Using too small of an audience

- Ending tests too soon

- Ignoring post-click behavior

- Forgetting to act on insights from past tests

- Not using consistent KPIs for comparison

- Assuming one test result applies to all audiences

- Testing only one version per test

- Not setting clear goals before testing

- Focusing too much on vanity metrics (opens, clicks)

- Overcomplicating the test (keep it simple!)

- Relying on gut feelings instead of data

- Not testing enough variations over time

By keeping these mistakes in check, you’ll be in a much better spot to run successful tests and learn exactly what works for your audience.

Real-life Examples of Split Testing

The best way to learn is by seeing it in action. That’s why we’re diving into some real-life split testing examples that’ll show you exactly how the pros do it.

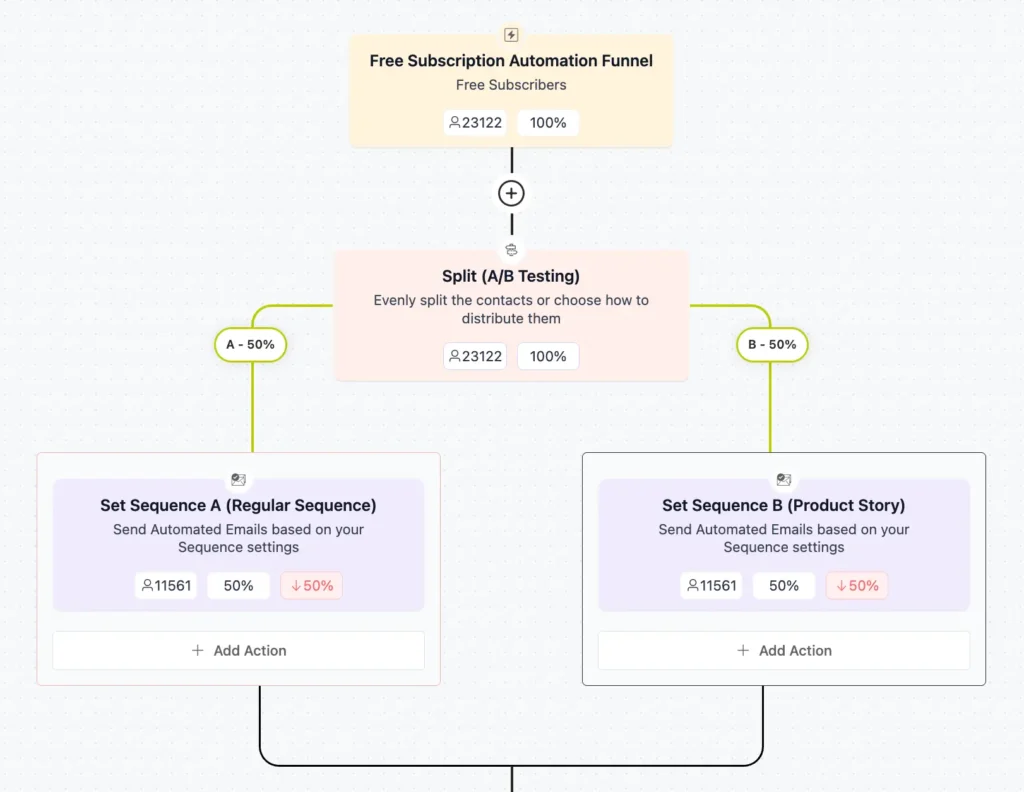

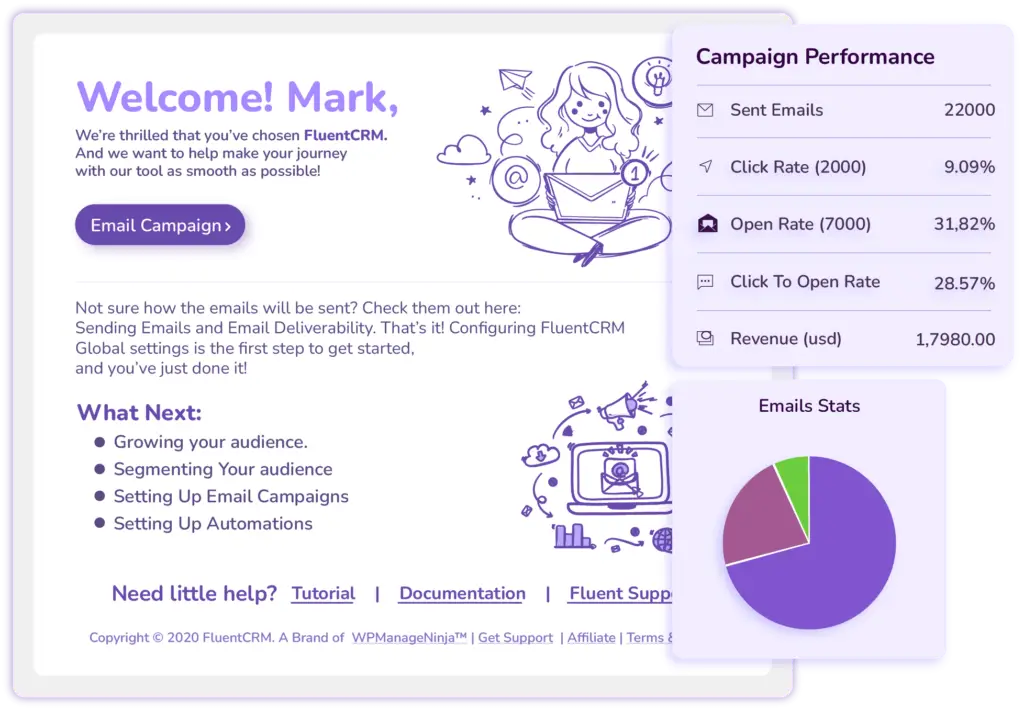

FluentCRM

At FluentCRM, we ran a split test with two onboarding email sequences—one with our usual getting-started tips and another that shared our journey, how we built FluentCRM, and how we use it ourselves.

The storytelling version outperformed the standard one, boosting conversions by 23%!

It clearly showed us that people don’t just want instructions—they want connection. And real stories make a real impact.

By the way, if you are interested in finding out more statistics related to email marketing, give this blog a read.

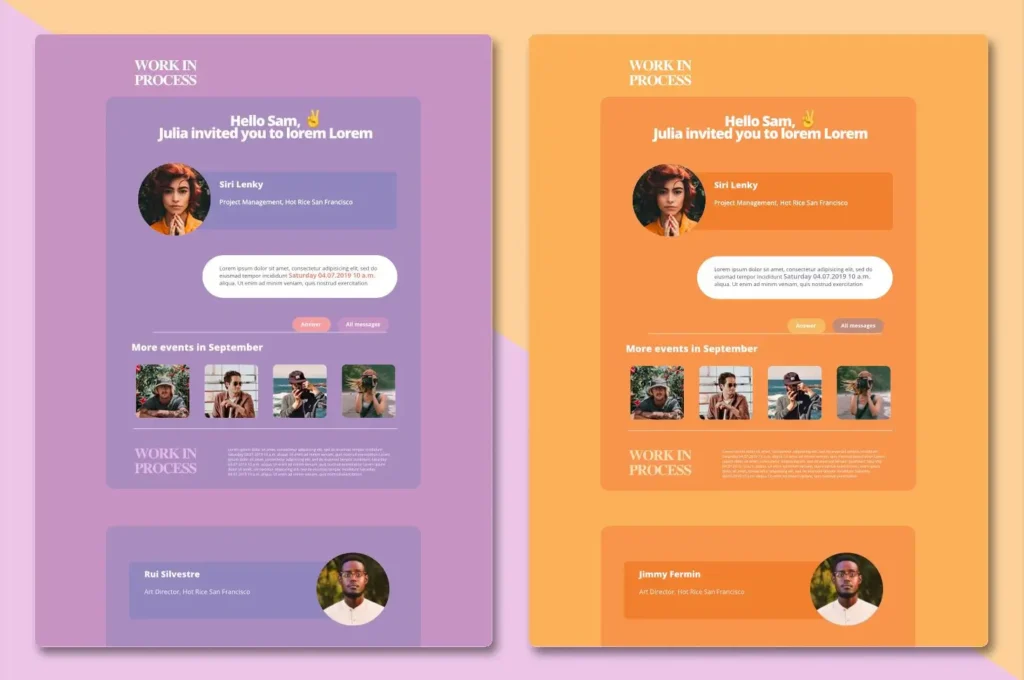

Omnisend

Here, you can see an example of Omnisend sending two emails with two different preheader texts, and running an A/B test between them.

To get a meaningful result, they split their subscribers into two groups and analyzed which version worked better in each one.

JUNE

Take this split test example by JUNE. They kept the whole layout exactly the same and experimented only with the background color.

Litmus

Litmus went one step further. They tested a plain text email against a graphic email, and in their analysis, the plain text one performed better.

Metrics That Actually Matter (Beyond Open Rates)

Obviously, you’re reading this blog to boost your conversions. To gauge performance, we all used to rely on open rate. But thanks to mailbox privacy changes such as Apple’s Mail Privacy Protection, which preloads tracking pixels, open rates aren’t always reliable. Focus on:

- Click-through rate (CTR)

- Conversion rate

- Revenue per email

- Time on page (after they click)

- Bounce rate on landing page

- Email Sharing Rate

- List growth rate

- Spam Complaints

These are the metrics that tell the real story. So, when you create a split test from now on, keep these metrics in mind.
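Several of these metrics are simple ratios you can compute from your campaign export. Here's a minimal Python sketch. The `CampaignStats` fields and the example numbers are hypothetical, and definitions vary between tools (some divide conversions by clicks instead of by delivered emails), so check how your platform counts before comparing.

```python
from dataclasses import dataclass

@dataclass
class CampaignStats:
    delivered: int          # emails that reached an inbox
    clicks: int             # unique clicks on links in the email
    conversions: int        # purchases, signups, or whatever your goal is
    revenue: float          # revenue attributed to this email
    spam_complaints: int    # recipients who marked the email as spam
    landing_sessions: int   # landing page visits that came from the email
    landing_bounces: int    # visits that left without any further action

    def report(self):
        """Per-email metrics; every rate uses the denominator noted below."""
        return {
            "ctr": self.clicks / self.delivered,
            "conversion_rate": self.conversions / self.delivered,
            "revenue_per_email": self.revenue / self.delivered,
            "spam_complaint_rate": self.spam_complaints / self.delivered,
            "landing_bounce_rate": self.landing_bounces / self.landing_sessions,
        }

# Hypothetical numbers for one version of a campaign
stats = CampaignStats(
    delivered=4000, clicks=200, conversions=40, revenue=1800.0,
    spam_complaints=2, landing_sessions=200, landing_bounces=90,
)
report = stats.report()
print(report)
```

Run the same report for each version in your test and compare them side by side, using the same definitions every time.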

Pro-Tip: In this fast-paced world of AI, marketing is changing. To keep up, these are some Key Performance Indicators (KPIs) you must check!

Turn Data into Wins with Email Split Testing

Split testing isn’t just a fancy buzzword—it’s the backbone of a winning email marketing strategy. By testing, analyzing, and optimizing, you’re not just guessing what works; you’re making data-driven decisions that take your email marketing efforts to the next level.

From crafting a solid hypothesis to segmenting your email subscribers and using the right testing tools, every step you take brings you closer to understanding what truly resonates with your audience. And the best part? The more you test, the smarter your future email campaigns become.

Remember, email marketing isn’t a one-and-done deal. It’s an ongoing process of learning, tweaking, and improving. So, don’t be afraid to experiment, trust the data (even when it surprises you), and keep refining your approach!

Samira Farzana

Once set out on literary voyages, I now explore the complexities of content creation. What remains constant? A fascination with unraveling the “why” and “how,” and a knack for finding joy in quiet exploration, with a book as my guide. But when it’s not a book, it’s films and anime.
